GitOps Continuous Deployment: FluxCD Advanced CRDs¶

FluxCD is a powerful ecosystem of GitOps operators that can be enabled on-demand as per the requirement of your environment. It enables you to opt-in for the features you need and to disable the ones you don't.

As the complexity and requirements of your environment grow, so does the need for extra tooling to implement the features you need.

FluxCD comes with more than just the support for Kustomization and HelmRelease. With FluxCD, you can also manage your Docker images as new versions get built. You can also get notified of the events that happen on your behalf by the FluxCD operators.

Stick till the end to see how you can take your Kubernetes cluster to the next level using advanced FluxCD CRDs.

Introduction¶

We have covered the beginner's guide to FluxCD in an earlier post.

This blog post will continue from where we left off and covers the advanced CRDs not included in the first post.

Specifically, we will mainly cover the Image Automation Controller1 and the Notification Controller2 in this post.

Using the CRDs provided by these operators, we will be able to achieve the following inside our Kubernetes cluster:

- Fetch the latest tags of our specified Docker images

- Update the Kustomization3 to use the latest Docker image tag based on the desired tag pattern

- Notify and/or alert external services (e.g. Slack, Discord, etc.) based on the severity of the events happening within the cluster

If you're as pumped as I am, let's not waste another second and dive right in!

Pre-requisites¶

Make sure you have the following setup ready before going forward:

- A Kubernetes cluster accessible from the internet (v1.30 as of writing). Feel free to follow our earlier guides if you need assistance.

- FluxCD operator installed. Follow our earlier blog post to get started: Getting Started with GitOps and FluxCD

- Either Gateway API4 or an Ingress Controller5 installed on your cluster. We will need this internet-accessible endpoint to receive the webhooks from the GitHub repository to our cluster.

- Both the External Secrets Operator and cert-manager installed on your cluster. That said, feel free to use any alternative that suits your environment best.

- Optionally, GitHub CLI v26 installed for TF code authentication. The alternative is to use GitHub PAT, which I'm not a big fan of!

- Optionally a GitHub account, although any other Git provider will do when it comes to the Source Controller7.

Source, Image Automation & Notification Controllers 101¶

Before getting hands-on, a bit of explanation is in order.

The Source Controller8 in FluxCD is responsible for fetching the artifacts and the resources from the external sources. It is called Source controller because it provides the resources needed for the rest of the FluxCD controllers.

These Sources can be of various types, such as GitRepository, HelmRepository, Bucket, etc. It will need the required auth and permission(s) to access those repositories, but, once given proper access, it will mirror the contents of said sources to your cluster so that you can have seamless integration from your external repositories right into the Kubernetes cluster.

The Image Automation Controller9 is responsible for managing the Docker images. It fetches the latest tags, groups them based on defined patterns and criteria, and updates the target resources (e.g. Kustomization) to use the latest image tags; this is how you achieve the continuous deployment of your Docker images.

The Notification Controller10, on the other hand, is responsible for both receiving and sending notifications. It can receive the events from the external sources11, e.g. GitHub, and acts upon them as defined in its CRDs. It can also send notifications from your cluster to the external services. This can include sending notifications or alerts to Slack, Discord, etc.

This is just an introduction and sounds a bit vague. So let's get hands-on and see how we can use these controllers in our cluster.

Application Scaffold¶

Since you will see a lot of code snippets in this post, here's the directory structure you'd better be prepared for:

.
├── echo-server/
├── fluxcd-secrets/
├── github-webhook/
├── kube-prometheus-stack/
├── kustomize/
│   ├── base/
│   └── overlays/
│       └── dev/
├── notifications/
├── webhook-receiver/
└── webhook-token/

The application we'll deploy today is a Rust echo-server. The rest of the configurations are complementary to the CD of this application.

Step 1: Required Secrets¶

The first step is to create a couple of secrets that our application needs, as do the controllers responsible for reconciling the deployment and its image automation.

Specifically, we'll need three secrets at this stage:

- GitHub Deploy Key12: This will be used by the Source Controller to fetch the source code artifacts from GitHub and store them in the cluster. The rest of the controllers will need to reference this GitHub repository in their YAML manifests. The same Deploy Key is also used to commit changes back to the repository (more on that in a bit).

- User GPG Key13: This is the key that the Image Update Automation will use to sign its commits when it changes the target image tag of our application after a new Docker image is built.

- GitHub Container Registry token: The GHCR token is used by the Image Automation controller to fetch the latest tags of the Docker images from the GitHub Container Registry. It is required for private repositories; you can skip it for public ones.

We will employ External Secrets Operator to fetch our secrets from AWS SSM. We have already covered the installation of ESO in a previous post; building on that, we only need to place the secrets in AWS and instruct the operator to fetch and feed them to our application.

variable "github_pat" {

type = string

nullable = false

description = "GitHub Personal Access Token with `read:packages` permission."

}

variable "gpg_key_passphrase" {

type = string

default = null

}

variable "github_owner" {

type = string

default = "developer-friendly"

description = "Can be an organization or a user."

}

variable "github_owner_individual" {

type = string

default = "developer-friendly-bot"

description = "Can ONLY be a user."

}

terraform {

required_providers {

aws = {

source = "hashicorp/aws"

version = "~> 5.49"

}

github = {

source = "integrations/github"

version = "~> 6.2"

}

gpg = {

source = "Olivr/gpg"

version = "~> 0.2"

}

tls = {

source = "hashicorp/tls"

version = "~> 4.0"

}

}

required_version = "< 2"

}

provider "github" {

owner = var.github_owner

}

provider "github" {

alias = "individual"

owner = var.github_owner_individual

}

####################

# Deploy key

####################

resource "tls_private_key" "this" {

algorithm = "ED25519"

}

resource "github_repository_deploy_key" "this" {

repository = "echo-server"

title = "Developer Friendly Bot"

key = tls_private_key.this.public_key_openssh

read_only = false

}

resource "aws_ssm_parameter" "deploy_key" {

name = "/github/echo-server/deploy-key"

type = "SecureString"

value = tls_private_key.this.private_key_pem

}

####################

# GHCR Secret

####################

resource "aws_ssm_parameter" "ghcr_token" {

name = "/github/echo-server/ghcr-token"

type = "SecureString"

value = var.github_pat

}

####################

# GPG key

####################

resource "gpg_private_key" "this" {

name = "Developer Friendly Bot"

email = "[email protected]"

passphrase = var.gpg_key_passphrase

rsa_bits = 2048

}

resource "github_user_gpg_key" "this" {

provider = github.individual

armored_public_key = gpg_private_key.this.public_key

}

resource "aws_ssm_parameter" "gpg_key" {

name = "/github/gpg-keys/developer-friendly-bot"

type = "SecureString"

value = gpg_private_key.this.private_key

}

output "github_deploy_key" {

value = {

id = github_repository_deploy_key.this.id

title = github_repository_deploy_key.this.title

repository = github_repository_deploy_key.this.repository

}

}

output "ssm_name" {

value = {

ghcr_token = aws_ssm_parameter.ghcr_token.name

deploy_key = aws_ssm_parameter.deploy_key.name

gpg_key = aws_ssm_parameter.gpg_key.name

}

}

Notice that we're defining two providers with differing aliases14 for our GitHub provider. A few things are worth noting:

- We are using GitHub CLI for the API authentication of our TF code to GitHub. The main and default provider is the developer-friendly organization; the other is the developer-friendly-bot normal user.

- The GitHub Deploy Key12 creation API call is something even an organization account can do. But for the creation of the User GPG Key13, we need to send the requests from a non-organization account, i.e., a normal user; that is the reason for using two providers instead of one. You could argue that we could create all resources using the normal account; however, we are employing the principle of least privilege here.

- For the GitHub CLI authentication to work, besides installing the CLI, you need to grant it access to your GitHub account. You can see the command and its resulting screenshot below:

And the web browser page:

Applying the stack above is straightforward:

Step 2: Repository Set Up¶

Now that we have our secrets ready in AWS SSM, we can go ahead and create the FluxCD GitRepository.

Remember we created GitHub Deploy Key earlier? We are passing it to the cluster this way:

apiVersion: external-secrets.io/v1beta1

kind: ExternalSecret

metadata:

name: echo-server-git

spec:

data:

- remoteRef:

key: /github/echo-server/deploy-key

secretKey: sshKey

refreshInterval: 5m

secretStoreRef:

kind: ClusterSecretStore

name: aws-parameter-store

target:

creationPolicy: Owner

deletionPolicy: Retain

immutable: false

template:

data:

identity: "{{ .sshKey | toString -}}"

known_hosts: |

github.com ssh-ed25519 AAAAC3NzaC1lZDI1NTE5AAAAIOMqqnkVzrm0SdG6UOoqKLsabgH5C9okWi0dh2l9GKJl

github.com ecdsa-sha2-nistp256 AAAAE2VjZHNhLXNoYTItbmlzdHAyNTYAAAAIbmlzdHAyNTYAAABBBEmKSENjQEezOmxkZMy7opKgwFB9nkt5YRrYMjNuG5N87uRgg6CLrbo5wAdT/y6v0mKV0U2w0WZ2YB/++Tpockg=

github.com ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABgQCj7ndNxQowgcQnjshcLrqPEiiphnt+VTTvDP6mHBL9j1aNUkY4Ue1gvwnGLVlOhGeYrnZaMgRK6+PKCUXaDbC7qtbW8gIkhL7aGCsOr/C56SJMy/BCZfxd1nWzAOxSDPgVsmerOBYfNqltV9/hWCqBywINIR+5dIg6JTJ72pcEpEjcYgXkE2YEFXV1JHnsKgbLWNlhScqb2UmyRkQyytRLtL+38TGxkxCflmO+5Z8CSSNY7GidjMIZ7Q4zMjA2n1nGrlTDkzwDCsw+wqFPGQA179cnfGWOWRVruj16z6XyvxvjJwbz0wQZ75XK5tKSb7FNyeIEs4TT4jk+S4dhPeAUC5y+bDYirYgM4GC7uEnztnZyaVWQ7B381AK4Qdrwt51ZqExKbQpTUNn+EjqoTwvqNj4kqx5QUCI0ThS/YkOxJCXmPUWZbhjpCg56i+2aB6CmK2JGhn57K5mj0MNdBXA4/WnwH6XoPWJzK5Nyu2zB3nAZp+S5hpQs+p1vN1/wsjk=

mergePolicy: Replace

type: Opaque

The format of the Secret that FluxCD expects for a GitRepository is covered in the FluxCD documentation, and you can use other forms of authentication as needed15. We are using a GitHub Deploy Key here because it is more flexible when it comes to revoking access, as well as granting write access to the repository12.

The Known Hosts value is coming from the GitHub SSH key fingerprint16. The bad news is that you will have to manually change them if they change theirs!
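If rotating those entries by hand sounds tedious, here is a minimal Python sketch of how the known_hosts block could be regenerated. It assumes that GitHub's public meta endpoint (https://api.github.com/meta) returns an `ssh_keys` list; the function names are made up for this illustration and are not part of FluxCD or ESO.

```python
import json
import urllib.request


def fetch_github_meta(url="https://api.github.com/meta"):
    """Download GitHub's public metadata document (assumed endpoint)."""
    with urllib.request.urlopen(url, timeout=10) as response:
        return json.load(response)


def to_known_hosts(meta, host="github.com"):
    """Turn the `ssh_keys` entries into known_hosts lines for `host`."""
    return "\n".join(f"{host} {key}" for key in meta.get("ssh_keys", []))


# Offline example with a shortened, fake key so nothing hits the network.
sample_meta = {"ssh_keys": ["ssh-ed25519 AAAAexample"]}
print(to_known_hosts(sample_meta))
```

You would still need to review the result and commit it to the ExternalSecret template yourself; blindly trusting freshly downloaded host keys defeats the purpose of pinning them.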

Using the Kubernetes Secret generated from the ExternalSecret above, we create the GitRepository over SSH instead of HTTPS, because the GitHub Deploy Key generated earlier in our TF code has write access. We'll talk about why in a bit.

apiVersion: source.toolkit.fluxcd.io/v1
kind: GitRepository
metadata:
  name: echo-server
spec:
  interval: 1m
  ref:
    branch: main
  secretRef:
    name: echo-server-git
  timeout: 60s
  url: ssh://git@github.com/developer-friendly/echo-server

And now let's create this stack:

apiVersion: kustomize.toolkit.fluxcd.io/v1
kind: Kustomization
metadata:
  name: echo-server-root
  namespace: flux-system
spec:
  force: true
  interval: 5m
  path: ./echo-server
  prune: true
  sourceRef:
    kind: GitRepository
    name: flux-system
  wait: false

Buckle Up!

There are going to be a lot of code snippets. Get ready to be bombarded with all that we had stored in the cannon.

Step 3: Application Deployment¶

Now that we have our GitRepository set up, we can deploy the application.

There is not much to say about the base Kustomization. It is a normal application like any other.

For your reference, here's the base Kustomization:

resources:
  - service.yml
  - deployment.yml
replacements:
  - source:
      kind: Deployment
      name: echo-server
      fieldPath: spec.template.metadata.labels
    targets:
      - select:
          kind: Service
          name: echo-server
        fieldPaths:
          - spec.selector
        options:
          create: true
  - source:
      kind: ConfigMap
      name: echo-server
      fieldPath: data.PORT
    targets:
      - select:
          kind: Deployment
          name: echo-server
        fieldPaths:
          - spec.template.spec.containers.[name=echo-server].ports.[name=http].containerPort
configMapGenerator:
  - name: echo-server
    envs:
      - configs.env
commonLabels:
  app: echo-server
  tier: backend
images:
  - name: ghcr.io/developer-friendly/echo-server

Now, let's go ahead and see what we need to create in our dev environment.

Notice that the AWS SSM key referenced in our ExternalSecret resource points to the same value we created earlier in our fluxcd-secrets TF stack.

apiVersion: external-secrets.io/v1beta1
kind: ExternalSecret
metadata:
  name: echo-server-docker
spec:
  data:
    - remoteRef:
        key: /github/echo-server/ghcr-token
      secretKey: token
  refreshInterval: 5m
  secretStoreRef:
    kind: ClusterSecretStore
    name: aws-parameter-store
  target:
    creationPolicy: Owner
    deletionPolicy: Retain
    immutable: false
    template:
      data:
        .dockerconfigjson: |
          {
            "auths": {
              "ghcr.io": {
                "username": "developer-friendly-bot",
                "password": "{{ .token | toString }}",
                "auth": "{{ printf "developer-friendly-bot:%s" .token | b64enc }}"
              }
            }
          }
      mergePolicy: Replace
      type: kubernetes.io/dockerconfigjson
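If the `printf ... | b64enc` expression in the template above looks cryptic, the following Python sketch builds the same kind of document by hand. It is only an illustration of the format: the helper name is invented here, and `dummy-token` is a placeholder rather than a real credential.

```python
import base64
import json


def docker_config_json(registry: str, username: str, token: str) -> str:
    """Build the JSON document stored under `.dockerconfigjson`."""
    # `auth` is simply base64("username:token"), mirroring the
    # `printf ... | b64enc` expression in the ExternalSecret template.
    auth = base64.b64encode(f"{username}:{token}".encode("utf-8")).decode("ascii")
    config = {
        "auths": {
            registry: {"username": username, "password": token, "auth": auth}
        }
    }
    return json.dumps(config, indent=2)


# "dummy-token" is a placeholder value for the sake of the example.
rendered = docker_config_json("ghcr.io", "developer-friendly-bot", "dummy-token")
print(rendered)
```

The point is that nothing magical is going on: the registry entry carries the username, the token, and a base64 encoding of the two joined by a colon.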

apiVersion: external-secrets.io/v1beta1
kind: ExternalSecret
metadata:
  name: echo-server-gpgkey
spec:
  data:
    - remoteRef:
        key: /github/gpg-keys/developer-friendly-bot
      secretKey: gitGpgSigningKey
  refreshInterval: 5m
  secretStoreRef:
    kind: ClusterSecretStore
    name: aws-parameter-store
  target:
    creationPolicy: Owner
    deletionPolicy: Retain
    immutable: false
    template:
      data:
        git.asc: '{{ .gitGpgSigningKey | toString -}}'
      mergePolicy: Replace
      type: Opaque

The following HTTPRoute uses the Gateway we created in last week's guide. Make sure to check it out if you haven't already.

apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: echo-server
spec:
  hostnames:
    - echo.dev.developer-friendly.blog
  parentRefs:
    - group: gateway.networking.k8s.io
      kind: Gateway
      name: developer-friendly-blog
      namespace: cert-manager
      sectionName: https
  rules:
    - backendRefs:
        - kind: Service
          name: echo-server
          port: 80
      filters:
        - responseHeaderModifier:
            set:
              - name: Strict-Transport-Security
                value: max-age=31536000; includeSubDomains; preload
          type: ResponseHeaderModifier
      matches:
        - path:
            type: PathPrefix
            value: /

The PLACEHOLDER in the following ImagePolicy will be replaced by the Kustomization in a bit.

Notice the pattern we are requesting, which MUST match the tags you build in your CI pipeline.

apiVersion: image.toolkit.fluxcd.io/v1beta2
kind: ImagePolicy
metadata:
  name: echo-server
spec:
  imageRepositoryRef:
    name: echo-server
    namespace: PLACEHOLDER
  filterTags:
    pattern: ^[0-9]+$ # github run-id, monotonic per repository
  policy:
    numerical:
      order: asc

Creating an ImageRepository for a private Docker image is what I consider to be a superset of the public ImageRepository. As such, I will only cover the private ImageRepository in this blog post.

Since the Git provider will be GitHub, we will need a GitHub PAT; I really wish GitHub would provide official OpenID Connect support someday to get rid of all these tokens lying around in our environments! There is unofficial OIDC support for GitHub as we speak17, but that is a topic for a future post.

The Kubernetes Secret referenced by the ImageRepository (echo-server-docker, generated from kustomize/overlays/dev/externalsecret-docker.yml shown earlier) and the one holding the GPG key are both fed into the cluster by the ESO that we have deployed in our cluster previously.

The following ImageUpdateAutomation resource requires write access to the repository; that's where the write access of the GitHub Deploy Key we mentioned earlier comes into play.

apiVersion: image.toolkit.fluxcd.io/v1beta1
kind: ImageUpdateAutomation
metadata:
  name: echo-server
spec:
  interval: 1m
  sourceRef:
    apiVersion: source.toolkit.fluxcd.io/v1
    kind: GitRepository
    name: echo-server
    namespace: flux-system
  git:
    checkout:
      ref:
        branch: main
    commit:
      messageTemplate: |
        [bot] Automated image update
        Files:
        {{ range $filename, $_ := .Updated.Files -}}
        - {{ $filename }}
        {{ end -}}
        Images:
        {{ range .Updated.Images -}}
        - {{.}}
        {{ end }}
        [skip ci]
      author:
        email: [email protected]
        name: Developer Friendly Bot | Dev
      signingKey:
        secretRef:
          name: echo-server-gpgkey
  update:
    path: kustomize/overlays/dev
    strategy: Setters

The echo-server-gpgkey Secret referenced under signingKey is generated from the ExternalSecret shown earlier (kustomize/overlays/dev/externalsecret-gpgkey.yml).

resources:
  - ../../base
  - externalsecret-docker.yml
  - externalsecret-gpgkey.yml
  - imagerepository.yml
  - imagepolicy.yml
  - imageupdateautomation.yml
  - httproute.yml
commonLabels:
  env: dev
images:
  - name: ghcr.io/developer-friendly/echo-server
    newTag: "9050352340" # {"$imagepolicy": "default:echo-server:tag"}
namespace: default
patches:
  - patch: |
      - op: replace
        path: /spec/imageRepositoryRef/namespace
        value: default
    target:
      group: image.toolkit.fluxcd.io
      version: v1beta2
      kind: ImagePolicy
      name: echo-server

The $imagepolicy marker on the newTag line refers to the echo-server ImagePolicy created earlier.

Image Policy Tagging¶

Did you notice the line with the following commented value?

newTag: "9050352340" # {"$imagepolicy": "default:echo-server:tag"}

Don't be mistaken!

This is not just a comment18. It is a marker that FluxCD understands and uses to update the Kustomization newTag field with the latest tag of the Docker image repository.

For your reference, here are the allowed references:

{"$imagepolicy": "<policy-namespace>:<policy-name>"}
{"$imagepolicy": "<policy-namespace>:<policy-name>:tag"}
{"$imagepolicy": "<policy-namespace>:<policy-name>:name"}
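To make the three marker forms less abstract, here is a toy Python version of what a setter does to a single line. It is a simplified sketch, not how FluxCD implements it: the `apply_setter` helper and the `policies` dictionary are invented for this example, and the rewritten value is always double-quoted for simplicity.

```python
import json
import re

# Matches a trailing comment such as: # {"$imagepolicy": "default:echo-server:tag"}
MARKER = re.compile(r'#\s*(\{"\$imagepolicy":\s*"[^"]+"\})\s*$')


def apply_setter(line, policies):
    """Rewrite the value on `line` if it carries an $imagepolicy marker.

    `policies` maps "namespace:name" to an (image_name, latest_tag) pair.
    """
    found = MARKER.search(line)
    if not found:
        return line  # no marker, nothing to do
    ref = json.loads(found.group(1))["$imagepolicy"]
    namespace, name, *field = ref.split(":")
    image, tag = policies[f"{namespace}:{name}"]
    if field == ["tag"]:
        value = tag
    elif field == ["name"]:
        value = image
    else:
        value = f"{image}:{tag}"  # no suffix means the full image reference
    key, _, _ = line.partition(":")
    return f'{key}: "{value}" {found.group(0).strip()}'


policies = {
    "default:echo-server": ("ghcr.io/developer-friendly/echo-server", "9050352340")
}
old_line = '  newTag: "9044139623" # {"$imagepolicy": "default:echo-server:tag"}'
print(apply_setter(old_line, policies))
```

Lines without a marker are left untouched, which is why the rest of your manifests stay exactly as you wrote them.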

To understand this better, let's take a look at the created ImageRepository first:

apiVersion: image.toolkit.fluxcd.io/v1beta2

kind: ImageRepository

metadata:

creationTimestamp: "2024-05-11T13:38:46Z"

finalizers:

- finalizers.fluxcd.io

generation: 1

labels:

env: dev

kustomize.toolkit.fluxcd.io/name: echo-server

kustomize.toolkit.fluxcd.io/namespace: flux-system

name: echo-server

namespace: default

resourceVersion: "2877651"

uid: ea1301e1-ae66-4261-a88d-aae5d46eda5a

spec:

exclusionList:

- ^.*\.sig$

image: ghcr.io/developer-friendly/echo-server

interval: 1m

provider: generic

secretRef:

name: echo-server-docker

status:

canonicalImageName: ghcr.io/developer-friendly/echo-server

conditions:

- lastTransitionTime: "2024-05-12T09:46:48Z"

message: 'successful scan: found 14 tags'

observedGeneration: 1

reason: Succeeded

status: "True"

type: Ready

lastHandledReconcileAt: "2024-05-12T10:37:16.872961325+07:00"

lastScanResult:

latestTags:

- latest

- f915598

- d85c754

- cf17395

- "9050352340"

- "9044139623"

- "9042583530"

- "9042393345"

- "9042110608"

- "9041904476"

scanTime: "2024-05-12T12:26:49Z"

tagCount: 14

observedExclusionList:

- ^.*\.sig$

observedGeneration: 1

Out of all these scanned images, the following are the ones that we care about in our dev environment.

apiVersion: image.toolkit.fluxcd.io/v1beta2

kind: ImagePolicy

metadata:

creationTimestamp: "2024-05-11T13:38:46Z"

finalizers:

- finalizers.fluxcd.io

generation: 1

labels:

env: dev

kustomize.toolkit.fluxcd.io/name: echo-server

kustomize.toolkit.fluxcd.io/namespace: flux-system

name: echo-server

namespace: default

resourceVersion: "2857234"

uid: af1a820c-5bcf-4a2c-8648-5a9d4edf4372

spec:

filterTags:

pattern: ^[0-9]+$

imageRepositoryRef:

name: echo-server

namespace: default

policy:

numerical:

order: asc

status:

conditions:

- lastTransitionTime: "2024-05-12T09:46:48Z"

message:

Latest image tag for 'ghcr.io/developer-friendly/echo-server' updated

from 9044139623 to 9050352340

observedGeneration: 1

reason: Succeeded

status: "True"

type: Ready

latestImage: ghcr.io/developer-friendly/echo-server:9050352340

observedGeneration: 1

observedPreviousImage: ghcr.io/developer-friendly/echo-server:9044139623

As you may remember from our ImagePolicy earlier, the pattern only accepts purely numerical tags, and the highest number is treated as the latest.

Here's the snippet from the ImagePolicy again:

filterTags:
  pattern: ^[0-9]+$ # github run-id, monotonic per repository
policy:
  numerical:
    order: asc
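In plain terms, the policy filters the scanned tags with the regex and then picks the largest number. Here is a small Python sketch of that selection, using the tags from the ImageRepository status above; the `select_latest` helper is my own naming and only mimics the behavior, it is not FluxCD code.

```python
import re

# Tags copied from the ImageRepository status shown earlier.
scanned_tags = [
    "latest", "f915598", "d85c754", "cf17395",
    "9050352340", "9044139623", "9042583530",
    "9042393345", "9042110608", "9041904476",
]


def select_latest(tags, pattern=r"^[0-9]+$"):
    """Keep the tags matching the filter, then pick the numerically highest."""
    matcher = re.compile(pattern)
    candidates = [tag for tag in tags if matcher.match(tag)]
    if not candidates:
        return None
    # Compare as integers, not as strings, so "10" beats "9".
    return max(candidates, key=int)


print(select_latest(scanned_tags))
```

One thing to keep in mind with this pattern: a short commit SHA that happens to consist of digits only would also pass the filter. With seven characters it is far smaller than a run ID, so it would not win, but it is worth knowing when you design your own tag scheme.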

GitHub CI Workflow¶

To elaborate further, this is the piece of GitHub CI definition that creates the image with the exact tag that we are expecting:

- name: Build and push Docker image
  uses: docker/build-push-action@v5
  with:
    context: .
    labels: |
      ${{ steps.meta.outputs.labels }}
      org.opencontainers.image.description=${{ steps.readme.outputs.content }}
    push: ${{ github.ref == 'refs/heads/main' }}
    platforms: linux/amd64,linux/arm64
    tags: |
      ${{ env.REGISTRY }}/${{ env.GITHUB_REPOSITORY }}:${{ steps.short-sha.outputs.short-sha }}
      ${{ env.REGISTRY }}/${{ env.GITHUB_REPOSITORY }}:${{ github.run_id }}
      ${{ env.REGISTRY }}/${{ env.GITHUB_REPOSITORY }}:latest
      ${{ env.DOCKER_REPOSITORY }}:${{ steps.short-sha.outputs.short-sha }}
      ${{ env.DOCKER_REPOSITORY }}:latest

This CI definition produces images tagged the way you have seen in the status of the ImagePolicy, e.g. ghcr.io/developer-friendly/echo-server:9050352340.

You can employ other techniques as well. For example, you can use Semantic Versioning19 as a pattern, and optionally extract only a part of the tag to be used in the Kustomization. Perhaps a topic for another day.
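To give a taste of that idea without going into the FluxCD side of it, here is a rough Python sketch of "match a pattern, extract a part, pick the highest version". The tags and the `latest_semver` helper are hypothetical, and the comparison is deliberately simplified: it only understands plain MAJOR.MINOR.PATCH and ignores pre-release and build metadata.

```python
import re


def latest_semver(tags, pattern=r"^v(?P<version>\d+\.\d+\.\d+)$"):
    """Extract the `version` group from matching tags and return the highest."""
    matcher = re.compile(pattern)
    versions = []
    for tag in tags:
        found = matcher.match(tag)
        if found:
            extracted = found.group("version")
            # Compare numerically per component so 1.10.1 beats 1.9.3.
            parts = tuple(int(part) for part in extracted.split("."))
            versions.append((parts, extracted))
    if not versions:
        return None
    return max(versions)[1]


# Hypothetical tags, not the ones from the echo-server repository.
example_tags = ["v1.2.0", "v1.10.1", "v1.9.3", "latest", "f915598"]
print(latest_semver(example_tags))
```

Note how the leading `v` is dropped by the named group: the extracted part, not the raw tag, is what gets compared.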

Full CI Definition

name: ci

concurrency:

cancel-in-progress: true

group: ci-${{ github.event_name }}-${{ github.ref_name }}

on:

push:

branches:

- main

env:

REGISTRY: ghcr.io

GITHUB_REPOSITORY: ${{ github.repository }}

DOCKER_REPOSITORY: developerfriendly/${{ github.event.repository.name }}

permissions:

contents: read

jobs:

build-docker:

permissions:

contents: read

packages: write

security-events: write

runs-on: ubuntu-latest

steps:

- name: Checkout repo

uses: actions/checkout@v4

- name: Set up QEMU needed for Docker

uses: docker/setup-qemu-action@v3

- name: Set up Docker Buildx

uses: docker/setup-buildx-action@v3

- name: Login to GitHub Container Registry

uses: docker/login-action@v3

with:

password: ${{ secrets.GITHUB_TOKEN }}

registry: ${{ env.REGISTRY }}

username: ${{ github.actor }}

- id: readme

name: Read README

uses: actions/github-script@v7

with:

github-token: ${{ secrets.GITHUB_TOKEN }}

script: |

'use strict'

const { promises: fs } = require('fs')

const main = async () => {

const path = 'README.md'

let content = await fs.readFile(path, 'utf8')

core.setOutput('content', content)

}

main().catch(err => core.setFailed(err.message))

- name: Login to Docker hub

uses: docker/login-action@v3

with:

password: ${{ secrets.DOCKERHUB_PASSWORD }}

registry: docker.io

username: ${{ secrets.DOCKERHUB_USERNAME }}

- id: meta

name: Docker metadata

uses: docker/metadata-action@v5

with:

images: |

${{ env.REGISTRY }}/${{ env.GITHUB_REPOSITORY }}

- id: short-sha

name: Set image tag

run: |

echo "short-sha=$(echo ${{ github.sha }} | cut -c 1-7 )" >> $GITHUB_OUTPUT

- name: Build and push Docker image

uses: docker/build-push-action@v5

with:

context: .

labels: |

${{ steps.meta.outputs.labels }}

org.opencontainers.image.description=${{ steps.readme.outputs.content }}

push: ${{ github.ref == 'refs/heads/main' }}

platforms: linux/amd64,linux/arm64

tags: |

${{ env.REGISTRY }}/${{ env.GITHUB_REPOSITORY }}:${{ steps.short-sha.outputs.short-sha }}

${{ env.REGISTRY }}/${{ env.GITHUB_REPOSITORY }}:${{ github.run_id }}

${{ env.REGISTRY }}/${{ env.GITHUB_REPOSITORY }}:latest

${{ env.DOCKER_REPOSITORY }}:${{ steps.short-sha.outputs.short-sha }}

${{ env.DOCKER_REPOSITORY }}:latest

- name: Docker Scout - cves

uses: docker/scout-action@v1

with:

command: cves

ignore-unchanged: true

image: ${{ env.REGISTRY }}/${{ env.GITHUB_REPOSITORY }}:${{ github.run_id }}

only-fixed: true

only-severities: medium,high,critical

sarif-file: sarif.output.json

summary: true

- name: Upload SARIF to GitHub Security tab

uses: github/codeql-action/upload-sarif@v3

with:

sarif_file: sarif.output.json

Be sure to deploy the app.

apiVersion: kustomize.toolkit.fluxcd.io/v1
kind: Kustomization
metadata:
  name: echo-server
  namespace: flux-system
spec:
  force: true
  interval: 5m
  path: ./kustomize/overlays/dev
  prune: true
  sourceRef:
    kind: GitRepository
    name: flux-system
  wait: false

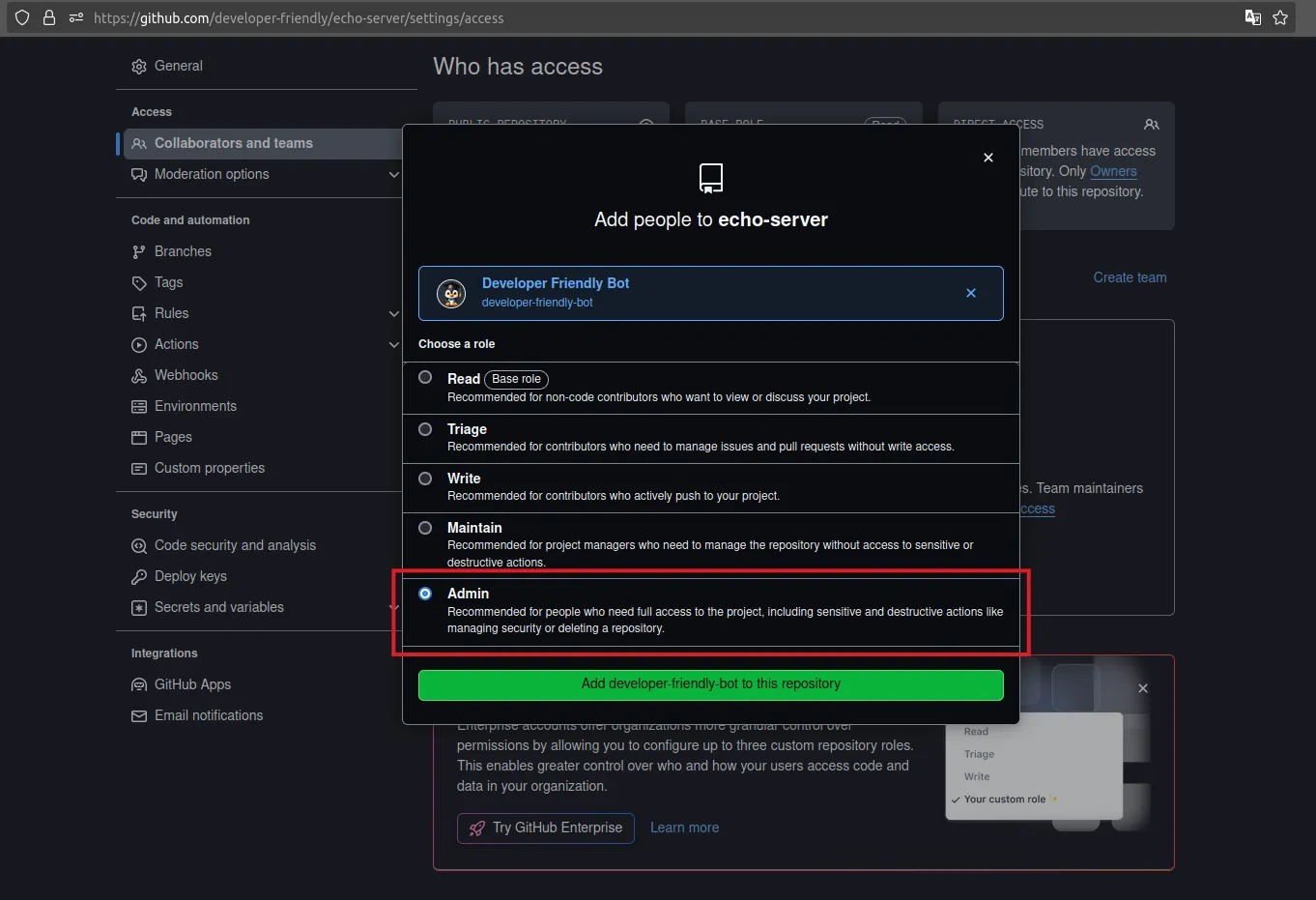

Note that in order for the Deploy Key write access to work, the bot user needs to have write access to the repository. In our case, we are also using the same account to create the GitHub Deploy Key, which forces us to grant it admin access, as you see below.

Step 4: Receiver and Webhook¶

FluxCD allows you to configure a Receiver so that external services can trigger the controllers of FluxCD to reconcile before the configured interval.

This can be greatly beneficial when you want to deploy the changes as soon as they are pushed to the repository. For example, a push to the main branch will, in turn, trigger a webhook from the Git repository to your Kubernetes cluster.

The important note to mention here is that the endpoint has to be publicly accessible through the internet. Of course you are going to protect it behind an authentication system using secrets, which we'll see in a bit.

First things first, let's create the Receiver so that the cluster is ready before any webhook is sent.

Generate the Secret¶

We need a trust relationship between the GitHub webhook system and our cluster. This comes in the form of including a token that only the two parties know of.

variable "token_length" {

description = "The length of the token"

type = number

default = 32

}

terraform {

required_providers {

aws = {

source = "hashicorp/aws"

version = "~> 5.49"

}

random = {

source = "hashicorp/random"

version = "~> 3.6"

}

}

required_version = "< 2"

}

resource "random_password" "this" {

length = var.token_length

special = false

}

resource "aws_ssm_parameter" "this" {

name = "/github/developer-friendly/blog/flux-system/receiver/token"

value = random_password.this.result

type = "SecureString"

}
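To see why a shared token is enough to establish that trust: GitHub signs every webhook delivery with an HMAC-SHA256 of the request body, keyed by the shared secret, and sends the result in the X-Hub-Signature-256 header. The receiving side recomputes the signature and compares. The Python sketch below illustrates the idea only; it is not the notification controller's actual code, and the token and payload are made-up values.

```python
import hashlib
import hmac


def sign_payload(token: str, body: bytes) -> str:
    """Compute the value GitHub puts in the X-Hub-Signature-256 header."""
    digest = hmac.new(token.encode("utf-8"), body, hashlib.sha256).hexdigest()
    return f"sha256={digest}"


def is_trusted(token: str, body: bytes, signature_header: str) -> bool:
    """Recompute the signature and compare it in constant time."""
    return hmac.compare_digest(sign_payload(token, body), signature_header)


# Made-up values for illustration only.
token = "example-shared-token"
body = b'{"ref": "refs/heads/main"}'
header = sign_payload(token, body)

print(is_trusted(token, body, header))          # sender knows the token
print(is_trusted("wrong-token", body, header))  # sender does not
```

Anyone can reach the public endpoint, but only a sender who knows the token can produce a signature that checks out, and any tampering with the body invalidates it.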

Create the Receiver¶

Now that we have the secret in our secrets management system, let's create the Receiver to be ready for all the GitHub webhook triggers.

apiVersion: external-secrets.io/v1beta1
kind: ExternalSecret
metadata:
  name: webhook-token
spec:
  data:
    - remoteRef:
        key: /github/developer-friendly/blog/flux-system/receiver/token
      secretKey: token
  refreshInterval: 5m
  secretStoreRef:
    kind: ClusterSecretStore
    name: aws-parameter-store
  target:
    creationPolicy: Owner
    deletionPolicy: Retain
    immutable: false
    template:
      mergePolicy: Replace
      type: Opaque

The value behind the token key is the one generated by the TF code in the previous section.

apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: github-receiver
spec:
  hostnames:
    - 3fd76690-8601-4894-a6e4-057f62e58551.developer-friendly.blog
  parentRefs:
    - group: gateway.networking.k8s.io
      kind: Gateway
      name: developer-friendly-blog
      namespace: cert-manager
      sectionName: https
  rules:
    - backendRefs:
        - kind: Service
          name: notification-controller
          port: 80
      filters:
        - responseHeaderModifier:
            set:
              - name: Strict-Transport-Security
                value: max-age=31536000; includeSubDomains; preload
          type: ResponseHeaderModifier
      matches:
        - path:
            type: PathPrefix
            value: /

apiVersion: notification.toolkit.fluxcd.io/v1
kind: Receiver
metadata:
  name: github-receiver
spec:
  events:
    - push
    - ping
  interval: 10m
  resources:
    - apiVersion: source.toolkit.fluxcd.io/v1
      kind: GitRepository
      name: echo-server
      namespace: flux-system
  secretRef:
    name: webhook-token
  type: github

resources:
  - externalsecret.yml
  - httproute.yml
  - receiver.yml
namespace: flux-system

And now, let's apply this stack.

apiVersion: kustomize.toolkit.fluxcd.io/v1
kind: Kustomization
metadata:
  name: webhook-receiver
  namespace: flux-system
spec:
  force: true
  interval: 5m
  path: ./webhook-receiver
  prune: true
  sourceRef:
    kind: GitRepository
    name: flux-system
  wait: false

At this point, if we inspect the created Receiver, we will get something similar to this:

apiVersion: notification.toolkit.fluxcd.io/v1

kind: Receiver

metadata:

creationTimestamp: "2024-05-13T04:13:46Z"

finalizers:

- finalizers.fluxcd.io

generation: 1

labels:

kustomize.toolkit.fluxcd.io/name: webhook-receiver

kustomize.toolkit.fluxcd.io/namespace: flux-system

name: github-receiver

namespace: flux-system

resourceVersion: "3003576"

uid: f5108cac-c669-48d1-bab2-840fbad2b9c9

spec:

events:

- push

- ping

interval: 10m

resources:

- apiVersion: source.toolkit.fluxcd.io/v1

kind: GitRepository

name: flux-system

namespace: flux-system

secretRef:

name: webhook-token

type: github

status:

conditions:

- lastTransitionTime: "2024-05-13T04:13:48Z"

message: 'Receiver initialized for path: /hook/dd69a41a27e2d4645b49b7d9e5e63216a7fdd749f7a2eba9d9e63438dde8b152'

observedGeneration: 1

reason: Succeeded

status: "True"

type: Ready

observedGeneration: 1

webhookPath: /hook/dd69a41a27e2d4645b49b7d9e5e63216a7fdd749f7a2eba9d9e63438dde8b152
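The webhookPath in the status is the piece the sender has to append to the public hostname. As far as I understand the Flux documentation, that path is derived from a SHA-256 digest of the token, the Receiver name and its namespace concatenated together; treat that formula as an assumption rather than a guarantee. The Python sketch below shows the idea with a made-up token, and then composes a full URL from the hostname and path that appear in this post.

```python
import hashlib


def webhook_path(token: str, name: str, namespace: str) -> str:
    """Derive a receiver path as /hook/sha256(token + name + namespace).

    The formula is an assumption based on my reading of the Flux docs.
    """
    digest = hashlib.sha256(f"{token}{name}{namespace}".encode("utf-8")).hexdigest()
    return f"/hook/{digest}"


def webhook_url(host: str, path: str) -> str:
    """Join the public hostname and the receiver path into the payload URL."""
    return f"https://{host}{path}"


# "example-token" is a placeholder; the real token lives in AWS SSM.
print(webhook_path("example-token", "github-receiver", "flux-system"))

# Hostname and path as they appear in the HTTPRoute and the Receiver status.
print(webhook_url(
    "3fd76690-8601-4894-a6e4-057f62e58551.developer-friendly.blog",
    "/hook/dd69a41a27e2d4645b49b7d9e5e63216a7fdd749f7a2eba9d9e63438dde8b152",
))
```

Because the token is part of the digest, rotating the token changes the path as well, so remember to update the sender whenever you rotate it.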

GitHub Webhook¶

All is ready for GitHub to notify our cluster on every push to the main branch. Let's create the webhook using a TF stack.

variable "github_owner" {
  type        = string
  default     = "developer-friendly"
  description = "Can be an organization or a user."
}

terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 5.49"
    }
    github = {
      source  = "integrations/github"
      version = "~> 6.2"
    }
  }

  required_version = "< 2"
}

provider "github" {
  owner = var.github_owner
}

data "aws_ssm_parameter" "this" {
  name = "/github/developer-friendly/blog/flux-system/receiver/token"
}

resource "github_repository_webhook" "this" {
  repository = "echo-server"

  configuration {
    url          = "https://3fd76690-8601-4894-a6e4-057f62e58551.developer-friendly.blog"
    content_type = "json"
    insecure_ssl = false
    secret       = data.aws_ssm_parameter.this.value
  }

  active = true
  events = ["push", "ping"]
}

output "webhook_config" {
  value = {
    repository = github_repository_webhook.this.repository
    url        = github_repository_webhook.this.url
  }
}

With the webhook set up, for every push to our main branch, GitHub will send a webhook to the Kubernetes cluster, targeting the FluxCD Notification Controller, which in turn will accept it and notify the .spec.resources of the Receiver we configured earlier.

As a result, the GitRepository resource in the Receiver specification is notified before its .spec.interval elapses. The outcome is faster deployment of our changes: the cluster and the controllers will not wait until the next reconciliation interval, but will get notified as soon as the changes land in the repository.

It's a perfect setup for instant deployment of your changes as soon as they are ready.
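A word on why the shared secret matters here. GitHub signs every delivery by computing an HMAC-SHA256 of the request body with the webhook secret and sends the result in the X-Hub-Signature-256 header; the receiving side recomputes the digest and compares the two. The Python sketch below illustrates that check only. It is not the Notification Controller's actual code, and the secret and payload are made-up values.

```python
import hashlib
import hmac


def sign(secret: bytes, body: bytes) -> str:
    """Build the header value GitHub sends: 'sha256=' + hex HMAC of the body."""
    return "sha256=" + hmac.new(secret, body, hashlib.sha256).hexdigest()


def is_valid(secret: bytes, body: bytes, header: str) -> bool:
    """Compare expected and received signatures in constant time."""
    return hmac.compare_digest(sign(secret, body), header)


if __name__ == "__main__":
    secret = b"example-webhook-token"  # placeholder, not a real token
    body = b'{"ref": "refs/heads/main"}'
    header = sign(secret, body)
    print(is_valid(secret, body, header))           # True
    print(is_valid(b"wrong-secret", body, header))  # False
```

A delivery signed with a different secret, or with a body altered in transit, fails the comparison and can be rejected before any reconciliation is triggered.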

Step 5: Notifications & Alert¶

With the application deployed, and the receiver of our GitOps setup ready to be notified on every new change, the next step is to get notified of the informational events and alerts coming from our cluster.

This way we get to be notified of normal operations of our clusters, as well as when things go south!

In the following stack, we are creating two different alerts for our notification delivery system, both delivered through AlertManager. One carries all the info events, which end up in our Discord, and the other carries all the errors, which end up in the configured Slack channel.

It gives you a good idea of how real-world scenarios can be handled when different groups of people are interested in different types and severities of events generated in a Kubernetes cluster.

You may as well mute the noisier info channel, and let the error channel page your ops team as soon as something lands on it.

NOTE: There are two types of events in Kubernetes: Normal and Warning. FluxCD considers normal events as info and warnings as errors. From the official Kubernetes documentation20:

type is the type of this event (Normal, Warning), new types could be added in the future. It is machine-readable. This field cannot be empty for new Events.

You can also check the source code for the Notification Controller in the FluxCD repository21.
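The mapping described in this note is small enough to write down. The sketch below is only an illustration of the rule stated above (Normal becomes info, Warning becomes error); the names are ours and nothing here is taken from the controller's source.

```python
# Mapping described in the note: Kubernetes event type -> Flux severity.
K8S_TYPE_TO_FLUX_SEVERITY = {"Normal": "info", "Warning": "error"}


def flux_severity(event_type: str) -> str:
    """Translate a Kubernetes event type into the severity Flux reports."""
    try:
        return K8S_TYPE_TO_FLUX_SEVERITY[event_type]
    except KeyError:
        # Kubernetes may add new event types in the future; fail loudly here.
        raise ValueError(f"unknown Kubernetes event type: {event_type!r}")


if __name__ == "__main__":
    print(flux_severity("Normal"))   # info
    print(flux_severity("Warning"))  # error
```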

# secret.yml
apiVersion: v1
kind: Secret
metadata:
  name: alertmanager-address
stringData:
  address: http://kube-prometheus-stack-alertmanager.monitoring:9093/api/v2/alerts
type: Opaque

# provider.yml
apiVersion: notification.toolkit.fluxcd.io/v1beta3
kind: Provider
metadata:
  name: alertmanager
spec:
  secretRef:
    name: alertmanager-address
  type: alertmanager

# alert-info.yml
apiVersion: notification.toolkit.fluxcd.io/v1beta3
kind: Alert
metadata:
  name: info
spec:
  eventMetadata:
    severity: info
  eventSeverity: info
  eventSources:
  - kind: GitRepository
    name: "*"
    namespace: flux-system
  - kind: Kustomization
    name: "*"
    namespace: flux-system
  - kind: HelmRelease
    name: "*"
    namespace: flux-system
  providerRef:
    name: alertmanager

# alert-error.yml
apiVersion: notification.toolkit.fluxcd.io/v1beta3
kind: Alert
metadata:
  name: error
spec:
  eventMetadata:
    severity: error
  eventSeverity: error
  eventSources:
  - kind: GitRepository
    name: "*"
    namespace: flux-system
  - kind: Kustomization
    name: "*"
    namespace: flux-system
  - kind: HelmRelease
    name: "*"
    namespace: flux-system
  providerRef:
    name: alertmanager

# kustomization.yml (file name assumed)
resources:
- secret.yml
- provider.yml
- alert-info.yml
- alert-error.yml
namespace: flux-system

And to create this stack:

apiVersion: kustomize.toolkit.fluxcd.io/v1
kind: Kustomization
metadata:
  name: notifications
  namespace: flux-system
spec:
  force: true
  interval: 5m
  path: ./notifications
  prune: true
  sourceRef:
    kind: GitRepository
    name: flux-system
  wait: false

Finally:

Kube Prometheus Stack¶

We haven't talked about how to configure the AlertManager to send its alerts to the corresponding channels, but, for the sake of completeness, and to avoid leaving you hanging, here's the full installation of the stack:

# repository.yml
apiVersion: source.toolkit.fluxcd.io/v1beta2
kind: HelmRepository
metadata:
  name: kube-prometheus-stack
spec:
  interval: 60m
  url: https://prometheus-community.github.io/helm-charts

# externalsecret.yml
apiVersion: external-secrets.io/v1beta1
kind: ExternalSecret
metadata:
  name: alertmanager-webhooks
spec:
  data:
  - remoteRef:
      key: /slack/developer-friendly/webhooks/alerts
    secretKey: slackWebhook
  - remoteRef:
      key: /discord/developer-friendly/webhooks/info
    secretKey: discordWebhook
  refreshInterval: 6m
  secretStoreRef:
    kind: ClusterSecretStore
    name: aws-parameter-store
  target:
    creationPolicy: Owner
    deletionPolicy: Delete
    immutable: false

# release.yml
apiVersion: helm.toolkit.fluxcd.io/v2beta2
kind: HelmRelease
metadata:
  name: kube-prometheus-stack
spec:
  chart:
    spec:
      chart: kube-prometheus-stack
      sourceRef:
        kind: HelmRepository
        name: kube-prometheus-stack
      version: 58.x
  install:
    crds: Create
    createNamespace: true
    remediation:
      retries: 3
  interval: 30m
  maxHistory: 10
  releaseName: kube-prometheus-stack
  targetNamespace: monitoring
  test:
    enable: true
    ignoreFailures: true
    timeout: 1m
  timeout: 5m
  upgrade:
    cleanupOnFail: true
    crds: CreateReplace
    remediation:
      remediateLastFailure: true
  values:
    alertmanager:
      alertmanagerSpec:
        alertmanagerConfigMatcherStrategy:
          type: None

Pay close attention to the alertmanagerConfigMatcherStrategy at the end of the HelmRelease values above. It works around a known issue that is discussed extensively in the relevant GitHub repository22.

# alertmanagerconfig.yml
apiVersion: monitoring.coreos.com/v1alpha1
kind: AlertmanagerConfig
metadata:
  name: alertmanager
spec:
  receivers:
  - name: default-receiver
  - name: slack
    slackConfigs:
    - apiURL:
        key: slackWebhook
        name: alertmanager-webhooks
        optional: false
      channel: "#alerts"
      sendResolved: true
      text: |-
        {{ range .Alerts }}
        *Alert:* {{ .Annotations.summary }} - `{{ .Labels.severity }}`
        *Description:* {{ .Annotations.description }}
        *Details:*
        {{ range .Labels.SortedPairs }} • *{{ .Name }}:* `{{ .Value }}`
        {{ end }}
        {{ end }}
      title: "{{ .CommonAnnotations.summary }}"
      titleLink: "{{ .CommonAnnotations.runbook_url }}"
  - name: discord
    discordConfigs:
    - apiURL:
        key: discordWebhook
        name: alertmanager-webhooks
        optional: false
      sendResolved: false
      message: |-
        {{ range .Alerts }}
        *Info:* {{ .Annotations.summary }} - `{{ .Labels.severity }}`
        *Description:* {{ .Annotations.description }}
        *Details:*
        {{ range .Labels.SortedPairs }} • *{{ .Name }}:* `{{ .Value }}`
        {{ end }}
        {{ end }}
      title: "{{ .CommonAnnotations.summary }}"
  route:
    continue: false
    groupBy:
    - severity
    - revision
    groupInterval: 10m
    groupWait: 5m
    receiver: default-receiver
    repeatInterval: 12h
    routes:
    - matchers:
      - name: severity
        value: info
      receiver: discord
      groupWait: 1s
      groupInterval: 1m
    - matchers:
      - name: severity
        value: error
      receiver: slack
      groupWait: 1m
    matchers:
    - name: severity
      matchType: "=~"
      value: "critical|warning|error|info"

# kustomization.yml (file name assumed)
resources:
- repository.yml
- externalsecret.yml
- release.yml
- alertmanagerconfig.yml
namespace: flux-system
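To make the routing in the AlertmanagerConfig above easier to follow, here is a rough Python model of how an alert's severity label picks a receiver. It is a simplification under our own assumptions: grouping and timing options are ignored, only the severity label is considered, and the function is ours rather than anything AlertManager exposes.

```python
import re
from typing import Optional

# Mirrors the route tree of the AlertmanagerConfig (grouping options omitted).
TOP_LEVEL_PATTERN = "critical|warning|error|info"
CHILD_ROUTES = [("info", "discord"), ("error", "slack")]
DEFAULT_RECEIVER = "default-receiver"


def pick_receiver(severity: str) -> Optional[str]:
    """Return the receiver for a severity, or None if this route ignores it."""
    # Regex matchers are anchored: the whole label value must match.
    if re.fullmatch(TOP_LEVEL_PATTERN, severity) is None:
        return None
    # With continue set to false, the first matching child route wins.
    for value, receiver in CHILD_ROUTES:
        if severity == value:
            return receiver
    # No child matched: fall back to the parent route's receiver.
    return DEFAULT_RECEIVER


if __name__ == "__main__":
    for severity in ("info", "error", "warning", "debug"):
        print(severity, "->", pick_receiver(severity))
```

In this model, info lands on Discord, error lands on Slack, and warning or critical alerts fall through to default-receiver, which has no integrations configured and therefore delivers nothing.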

Let's create this stack:

apiVersion: kustomize.toolkit.fluxcd.io/v1
kind: Kustomization
metadata:
  name: kube-prometheus-stack
  namespace: flux-system
spec:
  force: true
  interval: 5m
  path: ./kube-prometheus-stack
  prune: true
  sourceRef:
    kind: GitRepository
    name: flux-system
  wait: false

And apply it:

Bonus: Screenshots¶

The following are the relevant screenshots of the resources we have created and deployed in our Kubernetes cluster.

The commits that the bot makes to the repository will be signed with the GPG key we created earlier. This is what it looks like in the GitHub UI:

You can see the alerts triggered as specified in their YAML manifest:

Conclusion¶

That concludes all that we have to cover in this blog post.

I have to be honest with you. When I started this post, I wasn't sure if I'm gonna have enough material to be considered as one blog post. Yet here I am, writing ~20min

I really hope that you have enjoyed and learned something new from this post.

The whole idea was to give you a glimpse of what you can achieve with FluxCD and where it can take you as you grow your Kubernetes cluster.

With the techniques and examples mentioned in this blog post, you can go ahead and deploy your application like a champ.

At this point, we have covered all the advanced topics of FluxCD.

Should you have any questions or need further clarification, feel free to reach out from the links at the bottom of the page.

Until next time


- https://kubectl.docs.kubernetes.io/references/kustomize/kustomization/
- https://kubernetes.io/docs/concepts/services-networking/ingress-controllers/
- https://github.com/fluxcd/image-automation-controller/tree/v0.38.0
- https://github.com/fluxcd/notification-controller/tree/v1.3.0
- https://fluxcd.io/flux/components/notification/receivers/#type
- https://docs.github.com/en/authentication/connecting-to-github-with-ssh/managing-deploy-keys
- https://docs.github.com/en/authentication/managing-commit-signature-verification/adding-a-gpg-key-to-your-github-account
- https://developer.hashicorp.com/terraform/language/providers/configuration#alias-multiple-provider-configurations
- https://fluxcd.io/flux/components/source/gitrepositories/#secret-reference
- https://docs.github.com/en/authentication/keeping-your-account-and-data-secure/githubs-ssh-key-fingerprints
- https://fluxcd.io/flux/guides/image-update/#configure-image-update-for-custom-resources
- https://kubernetes.io/docs/reference/kubernetes-api/cluster-resources/event-v1/
- https://github.com/fluxcd/notification-controller/blob/580497beeb8bee4cee99163bb63fba679cd2d735/api/v1beta1/alert_types.go#L39
- https://github.com/prometheus-operator/prometheus-operator/discussions/3733#discussioncomment-8237810